We can't say enough about how fortunate we are that our partnerships bring some of the most transformative offerings in the industry to our customers. Today is no different: we are partnering with Cerebras Systems to announce the availability of the all-new Cerebras AI Model Studio.

Customers can use the Cerebras AI Model Studio to train models at GPU-impossible sequence lengths and keep their trained weights, all with predictable fixed pricing, faster time to solution, and unprecedented flexibility and ease of use. Hosted on the Cerebras Cloud @ Cirrascale, this new offering enables customers to train Generative Pre-trained Transformer (GPT)-class models, including GPT-J, GPT-3, and GPT-NeoX, on the industry-leading Cerebras Wafer-Scale Cluster of CS-2 systems.

Customers continue to tell us just how challenging and expensive it is to train large language models (LLMs). Multi-billion-parameter models require months to train on clusters of GPUs, and they also demand a team of engineers experienced in distributed programming and hybrid data-model parallelism. It is a multi-million-dollar investment that many organizations simply cannot afford.

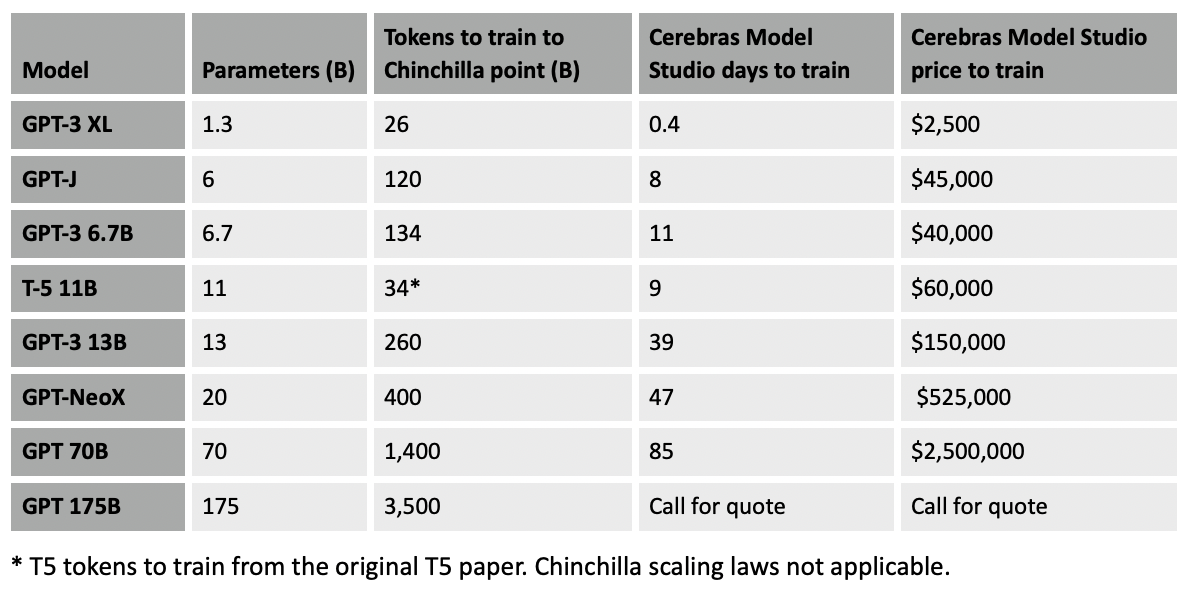

The Cerebras AI Model Studio lets users train GPT-class models at half the cost of traditional cloud providers, with only a few lines of code needed to get going. Users can choose from state-of-the-art GPT-class models, ranging from 1.3 billion to 175 billion parameters, and complete training with 8x faster time to accuracy than on an A100 GPU cluster. Users have cloud access to the Cerebras Wafer-Scale Cluster, which enables GPU-impossible work with first-of-its-kind, near-perfect linear scaling. With the Cerebras AI Model Studio, users can access up to a 16-node Wafer-Scale Cluster and train models with a maximum sequence length (MSL) of up to 50,000 tokens, opening up new opportunities for exciting research.

With every component optimized for AI work, the Cerebras Cloud @ Cirrascale delivers more compute performance in less space and with less power than any other solution. Depending on the workload, from AI to HPC, it delivers hundreds or thousands of times more performance than legacy alternatives while using only a fraction of the space and power. Cerebras Cloud is designed to enable fast, flexible training and low-latency data center inference, thanks to greater compute density, faster memory, and a higher-bandwidth interconnect than any other data center AI solution.

The Cerebras AI Model Studio is available now. For a limited time, users can sign up for a free two-day evaluation run. To get started with the Cerebras AI Model Studio, visit https://cirrascale.com/cerebras.